In this edition of Adventures in Fabric, we explore orchestration in Microsoft Fabric through the lens of Apache Airflow. We share our early experience using Airflow as an offering within Fabric, outline key functionality, note current limitations, and discuss Microsoft’s recently released native Fabric Operator.

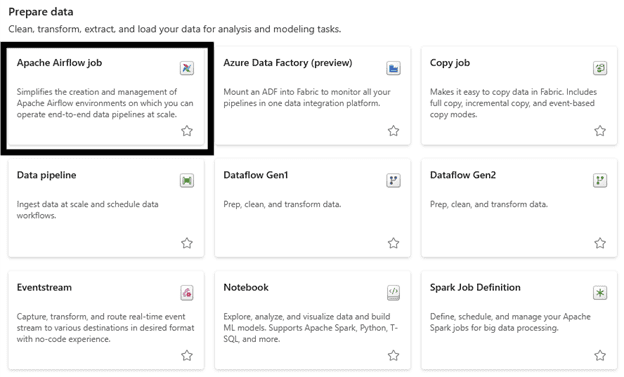

Some of you may be hearing about Airflow for the first time, while others may be familiar with it in its open-source capacity. The fact that Microsoft Fabric has Airflow embedded as a SaaS offering means we can use this orchestration tool without needing to set up and manage the underlying compute infrastructure.

Orchestration doesn’t often get the spotlight, but it is a foundational component of any robust ETL architecture. It ensures tasks are executed reliably, dependencies are managed, and workflows scale efficiently. Even the most advanced ETL processes are ineffective without proper orchestration to coordinate, automate, and manage execution.

In a nutshell, orchestration refers to the automation and coordination of tasks surrounding your ETL processes, sequencing activities in parallel or in series based on workflow requirements.

Key components of orchestration include:

- Scheduling

- Dependency management

- Error handling and recovery

- Monitoring and logging

- Scalability

Without orchestration, tasks need to be run manually, which is not sustainable or scalable. More importantly, orchestration should be data-driven, allowing workflows to scale and adapt through configurable parameters instead of hardcoded logic.

How to Run Everything

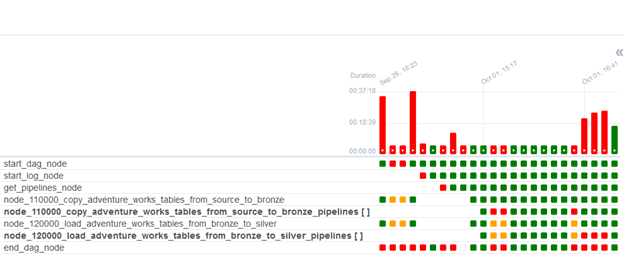

Data-driven orchestration relies on configuration to drive execution. Orchestration systems are responsible for selecting the correct parameters for each run, allowing the same code to operate efficiently across multiple tables in parallel. These parameters are stored in a configuration database, which the orchestrator uses to dynamically build and execute the workflow.

This architecture enables powerful capabilities. Adding a new table becomes as simple as inserting a new configuration record. These configurations can support event-based triggers, conditional task execution, dynamic sequencing, error handling, and more, all without rewriting code.
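To make the idea concrete, here is a minimal sketch of configuration-driven planning using only the Python standard library. The `table_config` table, its columns, and the `build_plan` helper are hypothetical names chosen for illustration; they are not taken from Airflow, Fabric, or any particular accelerator. The sketch reads enabled tables from a configuration database and groups them into batches, where every table in a batch can run in parallel and each batch waits on the one before it.

```python
import sqlite3
from graphlib import TopologicalSorter

# Hypothetical configuration table: one row per table to process.
# Column names are illustrative assumptions, not a real schema.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE table_config ("
    " table_name TEXT PRIMARY KEY,"
    " business_key TEXT NOT NULL,"
    " depends_on TEXT,"
    " enabled INTEGER NOT NULL DEFAULT 1)"
)
conn.executemany(
    "INSERT INTO table_config VALUES (?, ?, ?, ?)",
    [
        ("customer", "customer_id", None, 1),
        ("product", "product_id", None, 1),
        ("sales_order", "order_id", "customer", 1),
        ("legacy_feed", "feed_id", None, 0),  # disabled, so never planned
    ],
)


def build_plan(conn):
    """Return batches of run parameters; one batch can run in parallel."""
    rows = conn.execute(
        "SELECT table_name, business_key, depends_on "
        "FROM table_config WHERE enabled = 1"
    ).fetchall()
    params = {
        name: {"table_name": name, "business_key": key}
        for name, key, _ in rows
    }
    # Only keep dependencies that point at an enabled table.
    graph = {
        name: ({dep} if dep in params else set())
        for name, _, dep in rows
    }
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    plan = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        plan.append([params[name] for name in ready])
        sorter.done(*ready)
    return plan


plan = build_plan(conn)

# Onboarding a new table is a single insert; no code changes.
conn.execute(
    "INSERT INTO table_config VALUES ('invoice', 'invoice_id', 'sales_order', 1)"
)
plan_after_insert = build_plan(conn)
```

In a real orchestrator, each entry in a batch would become a task that receives those parameters, while the planning logic itself stays unchanged as configuration grows.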

At SDK, we built our Fabric Manager Accelerator with this flexibility in mind. Our goal was to make the accelerator templates as reusable and scalable as possible. For example, we use a single notebook and code library to read an extract table (bronze layer) and merge it into a processed table (silver layer) while maintaining history on every record based on the business key (a Type II slowly changing dimension pattern).
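For readers new to Type II history, the following is a simplified, in-memory sketch of the merge logic. It is not the accelerator's notebook code: the `merge_type2` function and the `valid_from`, `valid_to`, and `is_current` tracking columns are assumptions made for illustration, and a production version would run as a merge against lakehouse tables rather than Python lists. It also ignores deletes.

```python
from datetime import datetime

TRACKING_COLUMNS = {"valid_from", "valid_to", "is_current"}  # assumed names


def merge_type2(silver, extract, business_key, load_ts):
    """Merge extract rows into silver, keeping history per business key.

    Returns a new list of rows; the inputs are not modified.
    """
    result = [dict(row) for row in silver]
    current = {row[business_key]: row for row in result if row["is_current"]}

    for incoming in extract:
        key = incoming[business_key]
        existing = current.get(key)
        if existing is not None:
            payload = {
                k: v for k, v in existing.items() if k not in TRACKING_COLUMNS
            }
            if payload == incoming:
                continue  # nothing changed, keep the current version
            # Close out the old version instead of overwriting it.
            existing["valid_to"] = load_ts
            existing["is_current"] = False
        new_row = {
            **incoming,
            "valid_from": load_ts,
            "valid_to": None,
            "is_current": True,
        }
        result.append(new_row)
        current[key] = new_row
    return result


silver = [
    {
        "customer_id": 1,
        "city": "Austin",
        "valid_from": datetime(2024, 1, 1),
        "valid_to": None,
        "is_current": True,
    }
]
extract = [
    {"customer_id": 1, "city": "Dallas"},  # changed record
    {"customer_id": 2, "city": "Boston"},  # new record
]
merged = merge_type2(silver, extract, "customer_id", datetime(2024, 6, 1))
```

Because the business key is a parameter, the same function serves any table whose key is supplied by configuration, which is the property that makes a single notebook reusable.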

Orchestration in Fabric Using Airflow

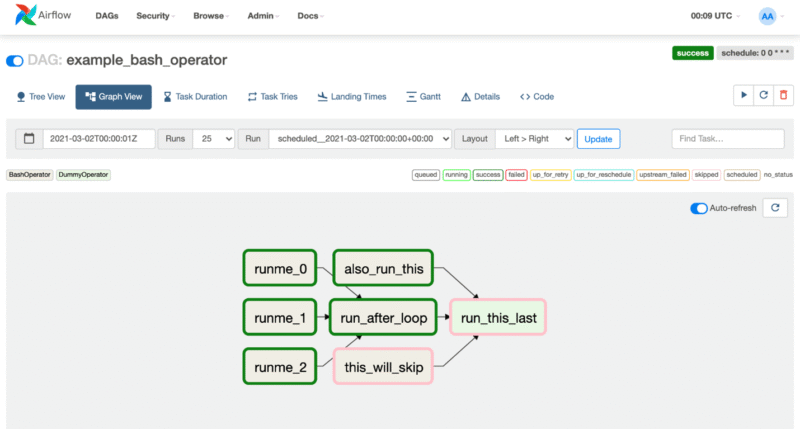

To support orchestration at scale, Microsoft Fabric includes Apache Airflow, signaling Microsoft’s recognition of its value in data pipeline management. Airflow is a widely adopted, fully code-driven orchestration tool. It uses Python to define DAGs (Directed Acyclic Graphs), enabling large-scale, controlled, and repeatable workflows.

Since the Airflow implementation runs within the Fabric ecosystem, it benefits from the same enhanced security and seamless interoperability as other Fabric components.

Out of the Box Capabilities

Out of the box, Airflow provides powerful monitoring and logging through an intuitive UI, along with built-in task recovery from any point of failure. If task 98 out of 100 fails, you can resolve the issue and resume from that point without re-running the entire pipeline. This level of recovery previously required custom engineering in other tools. With Airflow, this capability is included by default.
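To show what that recovery behavior amounts to, here is a toy sketch of the bookkeeping another tool would need custom engineering for. It is not how Airflow implements it (Airflow tracks the state of each task instance in its metadata database, and clearing a failed task re-runs it and its downstream tasks). The `run_pipeline` function and the `state` dictionary are hypothetical.

```python
def run_pipeline(tasks, state):
    """Run tasks in order, skipping any already marked 'success'.

    tasks: list of (task_id, callable) pairs.
    state: dict of task_id -> 'success' or 'failed', kept between runs.
    Returns the id of the task that failed, or None if all succeeded.
    """
    for task_id, func in tasks:
        if state.get(task_id) == "success":
            continue
        try:
            func()
        except Exception:
            state[task_id] = "failed"
            return task_id
        state[task_id] = "success"
    return None


calls = []
flags = {"source_fixed": False}


def make_task(n):
    def task():
        calls.append(n)
        if n == 98 and not flags["source_fixed"]:
            raise RuntimeError("simulated failure in task 98")
    return task


tasks = [(f"task_{n}", make_task(n)) for n in range(1, 101)]
state = {}

first_result = run_pipeline(tasks, state)   # stops at task_98
flags["source_fixed"] = True                # resolve the underlying issue
second_result = run_pipeline(tasks, state)  # resumes at task_98
```

The second run executes only tasks 98 through 100; the 97 tasks that already succeeded are skipped.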

Because DAGs are written in Python, tasks in Airflow can be executed dynamically based on configuration, with Python code calling APIs using the parameters for each run. Airflow is also integrated with Azure Key Vault, allowing us to keep confidential data secure and easily deploy to different environments by updating only the Key Vault and configuration.

Early Observations: Fabric’s Integration of Airflow

At the time of writing this post, Fabric’s Airflow implementation has just exited public preview. While it does offer powerful orchestration, it’s still maturing. Here are a few limitations we have encountered:

Tool Maturity

Fabric’s web-based code editor is meant to be a more convenient alternative to traditional local Airflow development, but it currently lacks key features:

- Renaming Files: You cannot rename files once created, even though there’s no reason from an Airflow perspective to lock in file names.

- Tabbing: The Tab key does not indent code in the editor. If you work in Python, you understand the importance of tabbing.

- Interface: The overall UI of the web-based code editor falls short, which is especially frustrating given that Fabric’s notebook web UI is already so strong.

Cluster Management

Changing Airflow settings requires stopping and restarting the cluster. While dedicated and local Airflow environments offer quick stop/starts, Fabric’s implementation is slightly more finicky. Restarts take longer than expected, and on rare occasions the web UI gets stuck in a ‘starting’ state. The only solution we’ve found in such cases is to delete and recreate the workflow.

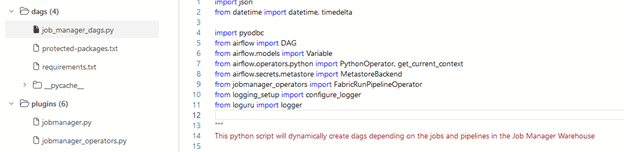

Git Support

Fabric’s Git integration is currently limited. While there is file-based support, DAGs and plugins must live in root-level folders named ‘dags’ and ‘plugins’. This is an antipattern for us at SDK, as we follow a defined folder structure for all repositories. The current setup also removes the ability to modify the code directly in the browser, forcing us to rely entirely on local development.

While tools like VS Code offer more control and flexibility for working with the DAG files, it’s still valuable to have the option of editing in the browser. Git integration improvements are on Microsoft’s roadmap, and we’re eager to see those updates.

What’s Next: Exploring the Native Fabric Operator

Airflow defines workflows as Directed Acyclic Graphs (DAGs), where each task is represented by an Operator. Operators are Python classes that abstract the logic of individual tasks, such as calling an API, running a SQL query, or executing a Python function, making them reusable and easy to manage within a DAG.

While generic Operators work for basic tasks, most major platforms such as Databricks, Snowflake, and Azure Data Factory have contributed custom Operators to the Airflow project to simplify integration. Databricks provides Job Run Operators, Snowflake has a suite, and Microsoft itself has custom Operators to work with the Azure Data Factory APIs.

During SDK’s development of our Fabric Manager Accelerator, Microsoft had not yet released a Fabric-specific Operator to execute and monitor Fabric objects. The APIs were available, so we created our own. However, our operator requires an unattended service account without MFA, because the Fabric APIs did not support service principal (SPN) authentication. We developed a workaround that allows an SPN to assume the credentials of a service account and execute the tasks as that account. The catch is that when the MSAL for Python library is used to retrieve credentials from Entra, the process fails if MFA is enabled.

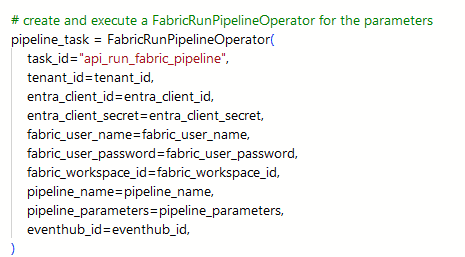

Microsoft has since released a native Fabric Operator, and we are excited to explore its capabilities. While it does not fully resolve the challenge of requiring a service principal (SPN) to assume the role of a user account, it introduces a potential path forward by supporting MFA through delegated credentials, as outlined in the Microsoft Learn article “Tutorial: Run a Fabric data pipeline and notebook using Apache Airflow DAGs.”

Additionally, Microsoft’s approach of enforcing synchronous pipeline execution, where Airflow waits until the execution is complete, may offer better performance on the Airflow cluster compared to SDK’s current method. With the native Operator now available, we are eager to test the Fabric Operator against our own in-house solution.
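As a rough picture of what synchronous execution means, the sketch below blocks until a run reaches a terminal status. It is not the Fabric Operator's code or SDK's in-house operator: `wait_for_completion`, the `get_status` callable, and the status names are all assumptions made for illustration. In practice `get_status` would wrap whatever API call reports the state of the run.

```python
import time

# Assumed status names; a real API may use different values.
TERMINAL_STATUSES = {"Completed", "Failed", "Cancelled"}


def wait_for_completion(
    get_status,
    poll_seconds=30.0,
    timeout_seconds=3600.0,
    sleep=time.sleep,
    clock=time.monotonic,
):
    """Poll get_status() until it returns a terminal status, then return it.

    Raises TimeoutError if no terminal status is seen before the deadline.
    """
    deadline = clock() + timeout_seconds
    while True:
        status = get_status()
        if status in TERMINAL_STATUSES:
            return status
        if clock() >= deadline:
            raise TimeoutError(f"run still '{status}' after {timeout_seconds}s")
        sleep(poll_seconds)


# Usage with a fake status source, so no real waiting or API call happens.
fake_statuses = iter(["NotStarted", "InProgress", "InProgress", "Completed"])
naps = []
final_status = wait_for_completion(
    lambda: next(fake_statuses),
    poll_seconds=5,
    sleep=naps.append,  # record the requested pauses instead of sleeping
)
```

Passing `sleep` and `clock` in as parameters keeps the loop easy to test without real delays.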

Closing Thoughts

At SDK, we’re confident in the long-term value of Apache Airflow as an offering in Microsoft Fabric and excited to work with a powerful, community-driven orchestration tool built directly into the ecosystem. While some limitations still exist, Microsoft’s ongoing investment in Fabric reinforces our trust in its direction. Since SDK’s Fabric Manager Accelerator is already built on an Airflow backbone, we’re closely tracking every update and look forward to the new features and improvements ahead.