It’s funny how it goes. You ask for choices, and then feel overwhelmed when you get them. You think, “Wow, that’s too many choices. How do I choose?”

Well, it’s kind of like that in Microsoft Fabric. You have all the tools at your fingertips: dataflows, data pipelines, notebooks, and eventstreams. But now the real question is, which tool do you use, and when?

Right Tool, Right Job

- Dataflows in Power BI

Dataflows have been available in Power BI for a while. They serve as a powerful tool for extracting, transforming, and loading (ETL) data. Developers work in a graphical interface that lets them transform data with a drag-and-drop approach. This low-code/no-code environment is especially appealing to citizen developers and those who may not have deep coding expertise. While the simplicity of the interface is a great advantage for rapid development and quick access to data, it also means that dataflows lack some of the advanced features found in more robust ETL tools.

- Data Pipeline

Think of a data pipeline as the overarching structure that connects various tasks. It serves as the control layer (akin to the control flow in SQL Server Integration Services) and provides the “plumbing” through which data moves. While the content running through the pipeline is the actual data, the pipeline itself handles how, when, and where data processing tasks occur. Data pipelines are highly versatile and effective for managing templates, executing notebooks, and orchestrating data workflows. One outstanding feature is the Copy Data task, which is often used for data ingestion. It streamlines moving data between different sources and destinations while ensuring data consistency and accuracy.

- Notebooks

Notebooks offer a highly flexible and interactive environment for writing and executing code. They are primarily used for coding in Python but also support Spark SQL commands, enabling a mix of programming paradigms to suit different use cases. The notebook format is particularly advantageous for data scientists and developers who need maximum flexibility when working within the Fabric ecosystem. Notebooks are ideal for writing custom scripts for complex data manipulation, machine learning, or advanced analytics. They provide far more control and precision than low-code tools.

- Eventstreams

Eventstreams are specifically designed to handle real-time data processing, focusing primarily on “insert-only” operations. They are ideal for working with transactional data that requires minimal transformation. Eventstreams excel in scenarios where immediate and continuous ingestion of data is essential, such as monitoring IoT sensors, capturing live user interactions, or processing financial transactions. Their streamlined nature ensures low-latency data handling, which is critical for applications that rely on up-to-the-moment insights.

- Semantic Models

This is the final stage, where everything comes together and users interact with the data. Aggregates and calculations are created using DAX. Although additional transformations can be done in semantic models, we keep those to a minimum, adding only the measures and calculations that support the drag-and-drop functionality of Power BI.

- Wheel Files

Wheel files are not a standard Fabric feature. They are an advanced technique borrowed from Python development practices: not a Fabric tool at all, but a capability Fabric supports. Wheel files bundle reusable code into a package that developers can call from within notebooks. By applying object-oriented best practices, we reduce the amount of code needed in notebooks, increase reusability, and lower maintenance costs.
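To illustrate the object-oriented idea, here is a minimal sketch of the kind of reusable code that could live in such a wheel. The class names (`LayerLoader`, `SilverLoader`) and the list-of-dicts row format are hypothetical and chosen so the example runs without Spark; in a real wheel the methods would more likely operate on Spark DataFrames.

```python
from abc import ABC, abstractmethod


class LayerLoader(ABC):
    """Hypothetical base class: shared load steps are written once, in the wheel."""

    def __init__(self, table_name: str):
        self.table_name = table_name

    def run(self, rows: list[dict]) -> list[dict]:
        # Template method: every loader follows the same sequence of steps.
        cleaned = [self.clean(row) for row in rows]
        return self.transform(cleaned)

    @staticmethod
    def clean(row: dict) -> dict:
        # Shared cleanup applied by every loader: trim whitespace from strings.
        return {k: v.strip() if isinstance(v, str) else v for k, v in row.items()}

    @abstractmethod
    def transform(self, rows: list[dict]) -> list[dict]:
        """Layer-specific logic supplied by each subclass."""


class SilverLoader(LayerLoader):
    """Hypothetical silver-layer loader: drops exact duplicate rows."""

    def transform(self, rows: list[dict]) -> list[dict]:
        seen, result = set(), []
        for row in rows:
            key = tuple(sorted(row.items()))  # assumes hashable column values
            if key not in seen:
                seen.add(key)
                result.append(row)
        return result


# In a notebook, after installing the wheel, the call site stays short:
rows = [{"id": 1, "name": " Ada "}, {"id": 1, "name": "Ada"}]
print(SilverLoader("customers").run(rows))  # → [{'id': 1, 'name': 'Ada'}]
```

The notebook only instantiates a loader and calls `run`; a fix to the shared cleanup lands in one place and reaches every notebook that uses the package.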

What Tools We Choose

The Job: Data Ingestion from Source

- Data pipelines are the clear choice here. With the Copy Data task supporting tons of connectors, you can ingest data using full or incremental loads.

- Together with our Fabric Manager framework, we can use dynamic inputs to loop through multiple tables using a small set of parameter-driven code.

- Watermarks for incremental data loading are stored in a table and used to minimize redundant data throughput.

- As of March 2025, the new Copy Job task simplifies this even further. At SDK, we’ve just added this to our feature map. Currently in preview, it will come with out-of-the-box incremental loading capabilities.
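The parameter-driven loop and watermark table described above can be sketched in plain Python. This is not the Fabric Manager framework itself; the table list, column names, and the in-memory `watermarks` dictionary are hypothetical stand-ins for the metadata and watermark tables a pipeline would read.

```python
from datetime import datetime

# Hypothetical metadata: one entry per source table the pipeline loops over.
TABLE_CONFIG = [
    {"table": "sales.orders", "load_type": "incremental", "watermark_column": "modified_at"},
    {"table": "sales.regions", "load_type": "full", "watermark_column": None},
]

# Hypothetical watermark table: last successfully loaded value per table.
watermarks = {"sales.orders": datetime(2025, 3, 1)}


def build_source_query(config: dict, watermarks: dict) -> str:
    """Return the query a Copy Data step would run for one table.

    Table and column names come from trusted metadata, not user input.
    """
    table = config["table"]
    if config["load_type"] == "full" or table not in watermarks:
        return f"SELECT * FROM {table}"
    last = watermarks[table].isoformat(sep=" ")
    return f"SELECT * FROM {table} WHERE {config['watermark_column']} > '{last}'"


def update_watermark(table: str, rows: list[dict], column: str, watermarks: dict) -> None:
    """After a successful load, advance the watermark to the newest value copied."""
    if rows:
        watermarks[table] = max(row[column] for row in rows)


for cfg in TABLE_CONFIG:
    print(build_source_query(cfg, watermarks))
```

Because the watermark only advances after a successful load, a failed run simply re-reads the same window on the next attempt.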

The Job: Moving from Source to Silver

- We use notebooks to call our wheel file. This approach provides the full flexibility of custom code while keeping our notebooks simple, readable, and maintainable.

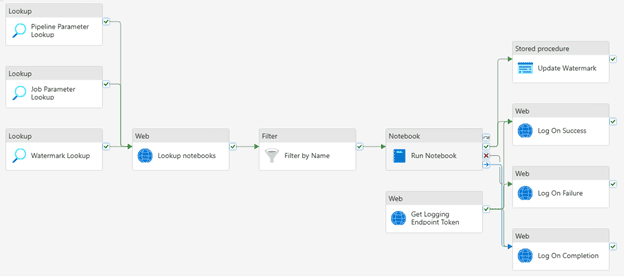

- The notebook is then embedded in a pipeline that passes in the parameters and logs all outcomes.

The Job: Moving from Silver to Gold

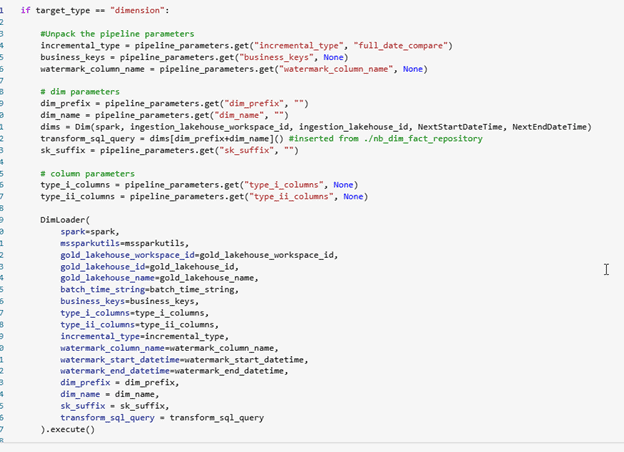

- Using the same approach as source to silver, we use a notebook that calls our wheel file to implement the dimension and fact tables.

- The notebook is then run by the same data pipelines as above.

- The notebook connects to a configuration notebook where the dimension or fact logic is written in Spark SQL.
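Here is a sketch of how a configuration notebook could hold dimension and fact logic as Spark SQL strings, with a shared helper turning each entry into a statement. The `GOLD_CONFIG` dictionary, the table and column names, and the `CREATE OR REPLACE TABLE ... AS SELECT` pattern are illustrative assumptions; in a Fabric notebook the resulting string would be passed to `spark.sql(...)`.

```python
# Hypothetical configuration: one Spark SQL SELECT per gold table.
GOLD_CONFIG = {
    "dim_customer": """
        SELECT customer_id, customer_name, country
        FROM silver.customers
    """,
    "fact_sales": """
        SELECT o.order_id, o.customer_id, o.order_date, o.amount
        FROM silver.orders AS o
    """,
}


def build_gold_statement(table_name: str, schema: str = "gold") -> str:
    """Wrap the configured SELECT in a statement that rebuilds the gold table."""
    if table_name not in GOLD_CONFIG:
        raise KeyError(f"No gold logic configured for {table_name!r}")
    select_sql = GOLD_CONFIG[table_name].strip()
    return f"CREATE OR REPLACE TABLE {schema}.{table_name} AS\n{select_sql}"


statement = build_gold_statement("dim_customer")
# In a Fabric notebook: spark.sql(statement)
print(statement)
```

Keeping only the SELECT logic in configuration means adding a new dimension is a new dictionary entry rather than a new notebook.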

The Job: Semantic Refresh

- We have chosen the import data approach for our semantic model. Although there are use cases for Direct Lake and DirectQuery, in our experience import mode still delivers the best report performance and interactivity. This approach, however, does require data refreshes.

- These refreshes can be scheduled independently of other tools, but for consistency we use a data pipeline with the semantic model refresh activity, so refreshes are scheduled alongside the rest of our data movement workflows.

The Job: Logging

- We log to the eventhouse throughout the entire solution.

- In data pipelines, this is done with a web task. In notebooks, we capture metrics such as duration and errors using Loguru. These logs are then surfaced in a Power BI report so the operations team can assess system health.
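The notebook side of this logging can be sketched as a context manager that records duration and any error, then hands the record to a sink. The solution described here uses Loguru; this sketch swaps in only the Python standard library so it runs anywhere, and `send_to_eventhouse` is a hypothetical placeholder for whatever writes the record to the eventhouse.

```python
import time
from contextlib import contextmanager
from datetime import datetime, timezone

collected = []  # stands in for the eventhouse table in this sketch


def send_to_eventhouse(record: dict) -> None:
    """Hypothetical sink; a real one would write the record to the eventhouse."""
    collected.append(record)


@contextmanager
def logged_step(step: str):
    """Record the start time, duration, and outcome of one processing step."""
    record = {"step": step, "started_utc": datetime.now(timezone.utc).isoformat()}
    start = time.perf_counter()
    try:
        yield record
        record["status"] = "succeeded"
    except Exception as exc:
        record["status"] = "failed"
        record["error"] = repr(exc)
        raise  # the notebook and pipeline still see the failure
    finally:
        record["duration_seconds"] = round(time.perf_counter() - start, 3)
        send_to_eventhouse(record)


with logged_step("silver.orders"):
    pass  # the actual load would run here

print(collected[-1]["status"])  # → succeeded
```

Because the record is sent from the `finally` block, a step is logged whether it succeeds or fails, which is what lets a report show error rates alongside durations.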

Lessons Learned

- One shortcoming is that, when using the Copy task, each connection must be hard-coded in the Fabric data pipeline. In Azure Data Factory, the connection can be parameterized, meaning that we can reuse one pipeline for all sources of the same type.

- For example, in Azure Data Factory I can build one SQL pipeline and reuse it for all SQL databases, but in Fabric I need to create a pipeline and a connection for each separate source. As the solution moves through additional environments, each one needs its own connection as well. At scale, this means many duplicated pipelines and many connections to manage.

- Service principal (SPN) access has just expanded again, and we’re looking forward to adding that as well.

What We’re Going to Explore Next

We’re always keeping an eye on what’s new in Fabric, and a few recent features have caught our attention:

- Job Task for Full Database Ingestion: This new task streamlines the process of ingesting an entire database into the bronze layer with a single operation. It promises major efficiency gains for initial data loads and could significantly reduce setup time.

- Variable Libraries for Environment Management: Recently released, variable libraries offer a centralized way to manage environment-specific configurations. We’re excited to see how this feature can simplify deployments and reduce errors when moving solutions across dev, test, and production environments.

Wrapping Up

With so many tools available in Microsoft Fabric, success comes down to knowing when and how to use each one. From low-code dataflows to powerful notebooks and real-time eventstreams, every piece plays a role in building scalable and intelligent data solutions.

As new features roll out and capabilities expand, we’ll continue exploring how to get the most out of Fabric. There’s always more to discover, so keep an eye out for the next installment in our Adventures in Fabric series.

Ursula Pflanz, Principal Architect & Head of Innovation at SDK